Open Source - Page 6 of 7

Open-source AI projects, community innovations, collaborative development, and freely accessible AI tools and frameworks.

The artificial intelligence arms race is heating up, and the United States is betting big on open-source technology as its strategic counterweight to China's technological ambitions.

In a bold move to give users more control over their digital experience, TikTok is testing a novel approach to content curation.

The AI regulatory landscape is heating up in Washington, with Republican leadership exploring aggressive federal intervention to prevent a patchwork of state-level restrictions.

Data scientists hunting for practical, hands-on Python training just got a serious boost.

The world of artificial intelligence just got a lot more immersive. A new open-source project called Marble AI is pushing the boundaries of generative technology, promising to transform simple text, images, or video clips into fully realized 3D...

OpenAI's latest documentation drop signals a subtle but significant shift in how developers and researchers interact with large language models.

Planning a holiday party in 2025 just got easier, and weirder, thanks to ChatGPT's latest trick.

The UK is taking a hard line against potential AI-driven child exploitation, targeting technology's dark potential before it emerges.

Meta just raised the bar for multilingual speech technology. The company's new open-source AI project aims to break down language barriers by targeting more than 1,600 languages with a massive audio dataset.

The coding AI race just got more competitive. Moonshot AI's latest large language model, Kimi K2, is turning heads with impressive performance on complex software engineering benchmarks.

Cybersecurity experts are racing to fortify AI systems against a growing threat that could compromise machine learning models' reliability and safety.

Data science tutorials often gloss over the messy realities of real-world datasets. Missing values can derail even the most carefully planned machine learning project, turning promising models into statistical nightmares.

Training massive AI models just got a serious speed boost. PyTorch has unveiled a breakthrough approach to handling Mixture of Experts (MoE) architectures on NVIDIA's high-performance DGX H100 systems, potentially democratizing advanced machine...

The open-source AI landscape just got a major shake-up. Moonshot's K2 Thinking model has emerged as a surprising frontrunner, challenging expectations about artificial intelligence development outside big tech's walled gardens.

The global education landscape is facing a critical challenge that could reshape learning for generations. Developing nations are confronting a stark reality: classrooms worldwide are running dangerously low on qualified teachers.

In a strategic pivot that could reshape its AI ambitions, Apple is set to integrate Google's Gemini technology into its next-generation intelligent assistant.

The AI development race is heating up, with Google Cloud making a bold move to attract developers hungry for flexible AI agent creation tools.

Data extraction just got a whole lot smarter. A new open-source tool called LangExtract is transforming how developers and researchers pull meaningful information from web sources, documents, and text lists using advanced AI techniques.

Cybersecurity is getting smarter, and faster, thanks to an unlikely partnership between CrowdStrike and NVIDIA.

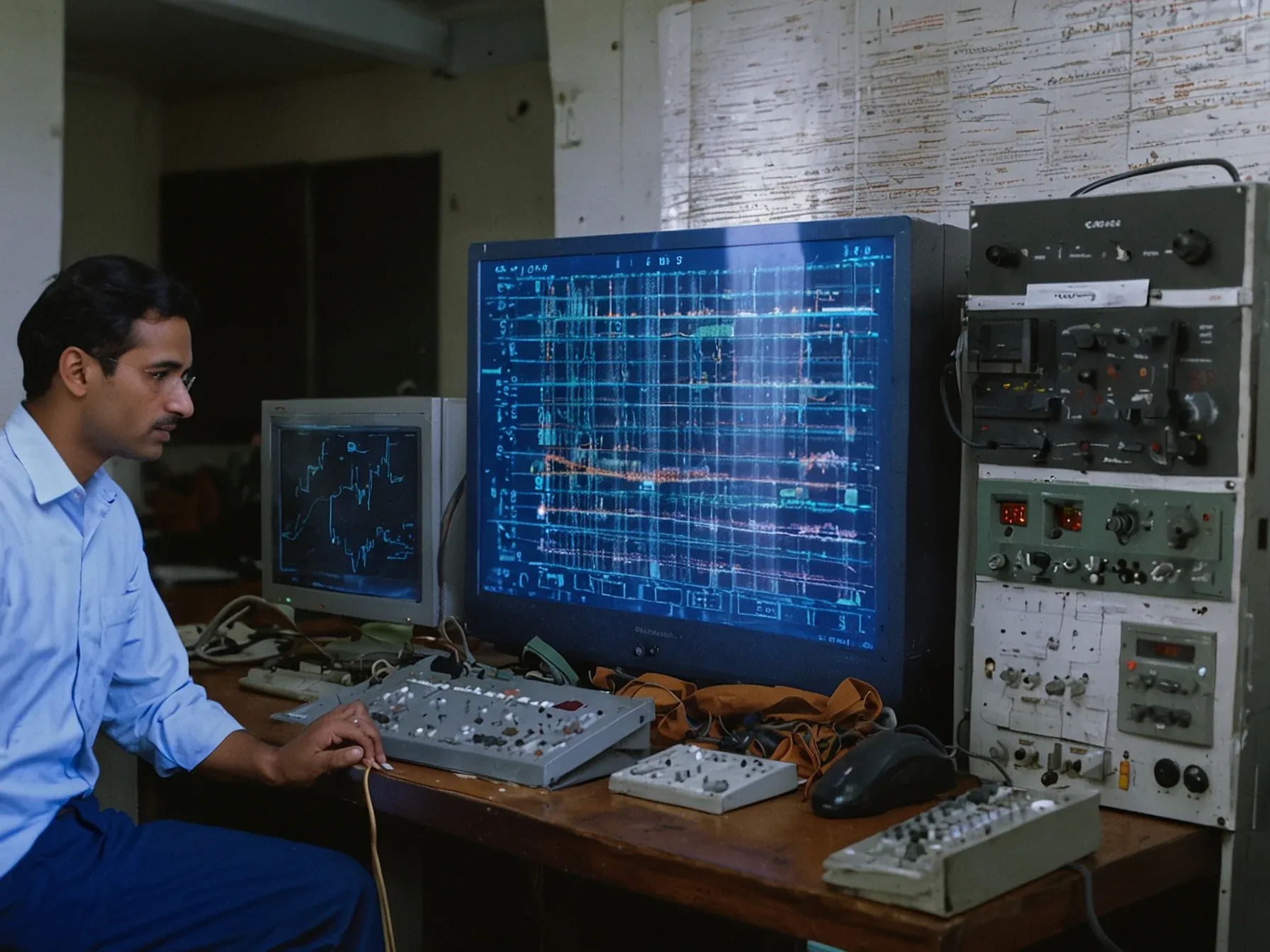

Speech recognition technology is taking a bold step forward in India, with researchers pushing the boundaries of linguistic inclusivity.
