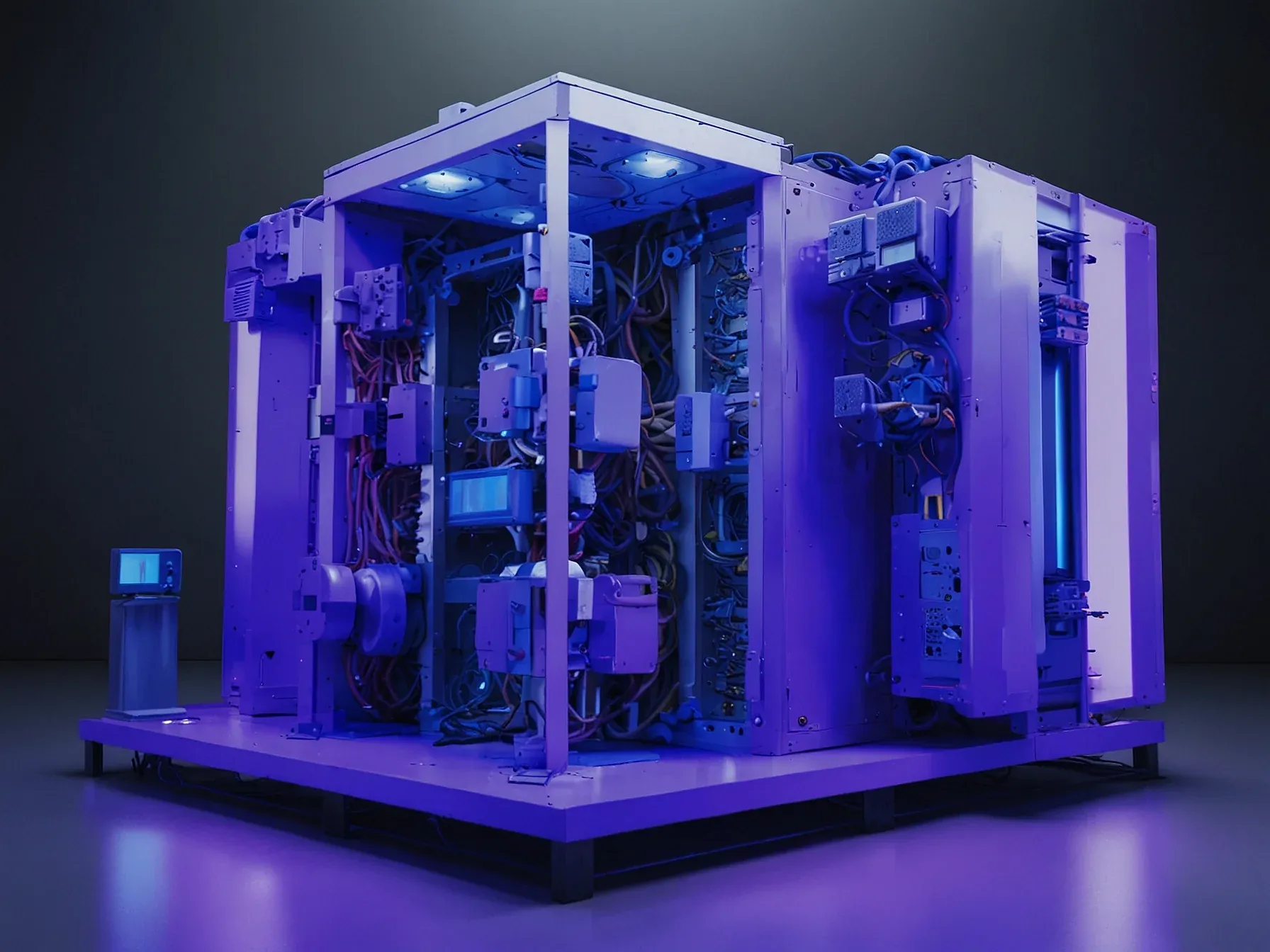


Transformer Decoder Breakthrough Boosts Neural Network Power

Decoder adds attention layer to refine encoder output in Transformers vs MoE

Neural networks are getting smarter, but not all performance boosts are created equal. Transformer architectures have long wrestled with how to process complex information efficiently, and a breakthrough in decoder design might just change the game.

Researchers have discovered a nuanced approach to refining how neural networks interpret and prioritize data. The key? A new attention mechanism that allows deeper, more targeted analysis of encoder outputs.

This isn't just another incremental tweak. The new method fundamentally shifts how transformers parse and understand information, potentially opening doors for more precise machine learning models.

By strategically inserting an additional attention layer, engineers are giving neural networks a more sophisticated way to filter and focus on critical data points. The result could mean significant improvements in everything from language processing to complex computational tasks.

Curious how this technical idea actually works? The details reveal a fascinating approach to computational intelligence.

The decoder uses the same two parts as the encoder (self-attention and a feed-forward network), but it has an extra attention layer in between. That extra layer lets the decoder focus on the most relevant parts of the encoder output, similar to how attention worked in classic seq2seq models. If you want a detailed understanding of Transformers, you can check out Jay Alammar's excellent article on the topic.
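To make that "focus" concrete, here is a minimal pure-Python sketch of the extra layer, usually called cross-attention or encoder-decoder attention: each decoder position scores every encoder position, turns the scores into weights with a softmax, and takes a weighted sum of the encoder output. It is an illustration under simplifying assumptions, not any library's implementation: the learned query/key/value projection matrices and multiple heads are omitted, and the names `cross_attention`, `encoder_out`, and `decoder_queries` are hypothetical.

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(queries, enc_keys, enc_values):
    """Scaled dot-product attention: queries come from the decoder,
    keys and values come from the encoder output (projections omitted)."""
    d_k = len(enc_keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity between this decoder position and every encoder position.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in enc_keys]
        weights = softmax(scores)
        # Weighted sum of encoder vectors: the decoder's "focused" view.
        out = [sum(w * v[j] for w, v in zip(weights, enc_values))
               for j in range(len(enc_values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy example (hypothetical data): 3 encoder positions, 1 decoder position.
encoder_out = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_queries = [[4.0, 0.0]]
outputs, weights = cross_attention(decoder_queries, encoder_out, encoder_out)
print(weights[0])
```

With this toy input, the query `[4.0, 0.0]` puts nearly all of its weight on the first and third encoder positions, which share its direction, and very little on the second.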

He explains Transformers and self-attention clearly and comprehensively, covering everything from basic to advanced concepts. Transformers work best when you need to capture relationships across a sequence and you have enough data or a strong pretrained model.

Neural networks just got a bit smarter. The transformer decoder's new attention layer represents a subtle but significant refinement in how machine learning models process information.

By adding an extra attention sublayer inside the decoder that reads the encoder's output, these models can more precisely filter and focus on the most relevant data points. This mirrors the attention used in classic sequence-to-sequence architectures, but it is applied in every decoder layer.
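Where that extra sublayer sits is easiest to see in code. The sketch below assembles one decoder layer in the order used by the standard Transformer: masked self-attention over the decoder's own tokens, then cross-attention over the encoder output, then a feed-forward sublayer, each wrapped in a residual connection. It is a simplified, hypothetical sketch: layer normalization, multiple heads, and learned projections are left out, and `feed_forward` is a placeholder that only applies ReLU rather than the two learned linear maps a real feed-forward network uses.

```python
import math

def softmax(scores):
    # Shift by the max for stability; math.exp(-inf) evaluates to 0.0.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values, causal=False):
    # Scaled dot-product attention; learned Q/K/V projections are omitted.
    d_k = len(keys[0])
    result = []
    for i, q in enumerate(queries):
        scores = []
        for j, k in enumerate(keys):
            if causal and j > i:
                # Mask future positions so token i cannot see later tokens.
                scores.append(float("-inf"))
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        weights = softmax(scores)
        result.append([sum(w * v[c] for w, v in zip(weights, values))
                       for c in range(len(values[0]))])
    return result

def add(x, y):
    # Residual connection: element-wise sum of two sequences of vectors.
    return [[a + b for a, b in zip(rx, ry)] for rx, ry in zip(x, y)]

def feed_forward(x):
    # Hypothetical placeholder for the position-wise feed-forward network.
    return [[max(0.0, v) for v in row] for row in x]

def decoder_layer(x, encoder_out):
    # 1. Masked self-attention over the tokens generated so far.
    x = add(x, attention(x, x, x, causal=True))
    # 2. The extra layer: cross-attention. Queries come from the decoder,
    #    keys and values come from the encoder output.
    x = add(x, attention(x, encoder_out, encoder_out))
    # 3. Position-wise feed-forward sublayer.
    x = add(x, feed_forward(x))
    return x

encoder_out = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
decoder_in = [[1.0, 0.0], [0.0, 1.0]]
print(decoder_layer(decoder_in, encoder_out))
```

Because of the causal mask in step 1, the first decoder position's output stays the same no matter what the later decoder tokens contain, while every decoder position can still see the entire encoder output in step 2.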

The additional layer essentially acts as a filter, allowing the decoder to zero in on the most critical elements of the encoder's output. It's a targeted approach that could improve accuracy.

While the technical details are complex, the core idea is straightforward: better signal selection. The decoder can now dynamically determine which encoder-generated information matters most for its specific task.
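For readers who want the formula behind that dynamic selection, the decoder's extra layer is usually written in the standard scaled dot-product form below. Here $H_{\text{dec}}$ holds the decoder's hidden states, $H_{\text{enc}}$ the encoder outputs, $W^Q$, $W^K$, $W^V$ are learned projection matrices, and $d_k$ is the key dimension:

$$
\operatorname{CrossAttention}(H_{\text{dec}}, H_{\text{enc}}) = \operatorname{softmax}\!\left(\frac{(H_{\text{dec}} W^Q)\,(H_{\text{enc}} W^K)^{\top}}{\sqrt{d_k}}\right) H_{\text{enc}} W^V
$$

Each row of the softmax matrix is a set of non-negative weights over the encoder positions that sums to 1; a large weight marks an encoder position the decoder treats as relevant when producing that output token.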

Researchers interested in the deeper mechanics might want to explore Jay Alammar's full breakdown of transformer architectures. His work provides an accessible entry point into understanding these intricate neural network designs.

The attention layer isn't revolutionary, but it's a smart optimization that could incrementally improve machine learning model performance across various applications.

Common Questions Answered

How does the new attention layer in transformer decoders improve neural network performance?

The new attention mechanism allows the decoder to focus more precisely on the most relevant parts of the encoder output. By adding an extra attention layer inside the decoder, between its self-attention and feed-forward sublayers, neural networks can filter and prioritize data points with enhanced precision.

What makes the new transformer decoder attention mechanism unique compared to previous models?

The breakthrough lies in the additional attention layer that enables deeper, more targeted analysis of encoder outputs. This approach mirrors classic sequence-to-sequence models but provides more sophisticated data interpretation capabilities.

Why are researchers excited about the new attention layer in neural network architectures?

The new attention mechanism represents a subtle but significant refinement in how machine learning models process complex information. By allowing more precise filtering and focusing on relevant data points, neural networks can potentially improve their overall performance and accuracy.