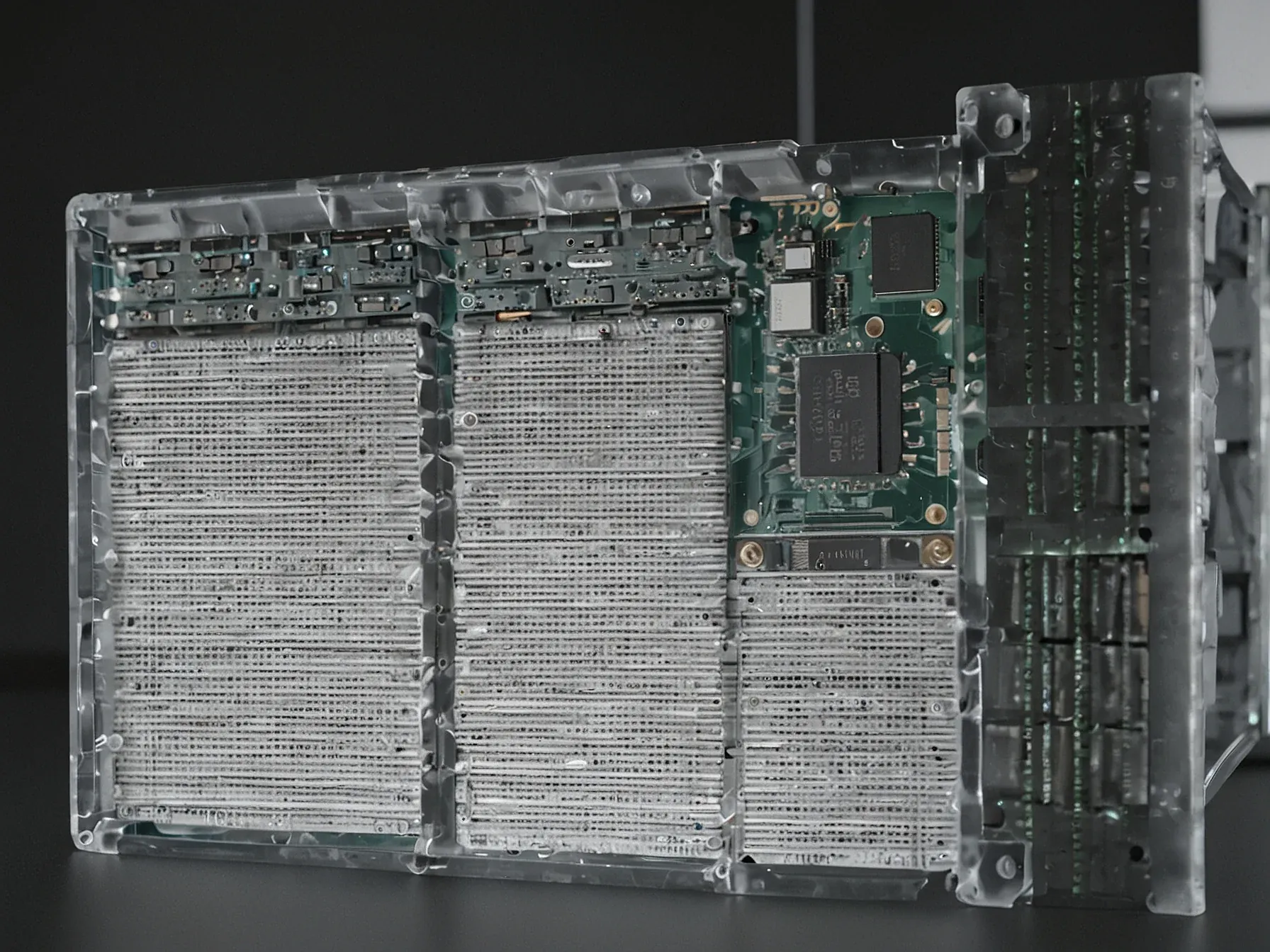

Editorial illustration for Anthropic Reveals How Reinforcement Learning Shapes Claude's Behavioral Traits

Inside Claude's Training: How Anthropic Shapes AI Behavior

Anthropic explains reinforcement learning metric for Claude’s wokeness

In the rapidly evolving world of artificial intelligence, Anthropic is pulling back the curtain on how its chatbot Claude develops nuanced behaviors. The AI startup is offering rare insight into the complex process of training large language models, specifically focusing on how reinforcement learning shapes an AI's responses and ethical boundaries.

Claude serves as a test case for how precisely machine learning can steer a model toward specific behavioral outcomes. Anthropic's researchers describe an approach that goes beyond a simple list of dos and don'ts, using the training process itself to shape how the model responds.

By publishing its evaluation metrics and describing its training techniques, the company offers a transparent look at the mechanisms behind Claude's personality. Its method suggests a more intentional path to AI systems that can navigate complex conversations while maintaining consistent ethical standards.

The company's approach hints at a deeper challenge: how to imbue artificial intelligence with nuanced, context-aware behaviors that align with human expectations.

Anthropic describes how it uses reinforcement learning "to reward the model for producing responses that are closer to a set of pre-defined 'traits.'" One of the desired traits encourages Claude to "try to answer questions in such a way that someone could neither identify me as being a conservative nor liberal." Anthropic also announced an open-source tool that measures Claude's responses for political neutrality; in its most recent test, Claude Opus 4.1 scored 95 percent and Claude Sonnet 4.5 scored 94 percent on even-handedness.

In effect, Anthropic treats political neutrality as a trait to be trained: reinforcement learning explicitly steers the model's responses toward it.

By rewarding Claude for responses that defy clear ideological categorization, Anthropic seems committed to developing an AI that resists partisan framing. The goal appears straightforward: create a model that can discuss complex topics without revealing inherent political leanings.
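To make "rewarding responses that are closer to a trait" concrete, here is a minimal Python sketch of the reward side only. It assumes a hypothetical grader that scores how well a response matches a trait on a 0 to 1 scale; in practice that role would be filled by a learned model, and the reinforcement learning update that consumes the reward is omitted. The names `trait_reward`, `preferred`, and `toy_grader` are illustrative and are not Anthropic's code.

```python
from typing import Callable, Sequence

# Hypothetical grader type: (response, trait) -> score in [0.0, 1.0].
TraitGrader = Callable[[str, str], float]

# Trait text quoted from Anthropic; everything else here is illustrative.
NEUTRALITY_TRAIT = (
    "try to answer questions in such a way that someone could "
    "neither identify me as being a conservative nor liberal"
)

# Assumption: phrases a crude grader treats as revealing a partisan identity.
PARTISAN_MARKERS = ("as a conservative", "as a liberal")


def toy_grader(response: str, trait: str) -> float:
    """Crude stand-in for a learned grader: ignores `trait` and only checks
    whether the response openly identifies with one side."""
    text = response.lower()
    return 0.0 if any(marker in text for marker in PARTISAN_MARKERS) else 1.0


def trait_reward(response: str, traits: Sequence[str], grade: TraitGrader) -> float:
    """Mean trait score; higher means the response is closer to the desired traits."""
    if not traits:
        raise ValueError("at least one trait is required")
    return sum(grade(response, trait) for trait in traits) / len(traits)


def preferred(a: str, b: str, traits: Sequence[str], grade: TraitGrader) -> str:
    """Return the candidate with the higher reward (ties go to `a`), i.e. the
    response a reward-driven training step would push the model toward."""
    return a if trait_reward(a, traits, grade) >= trait_reward(b, traits, grade) else b


if __name__ == "__main__":
    neutral = "Supporters say the policy lowers costs; critics say it reduces access."
    partisan = "As a liberal, I think the policy is obviously wrong."
    traits = [NEUTRALITY_TRAIT]
    print(trait_reward(neutral, traits, toy_grader))   # 1.0
    print(trait_reward(partisan, traits, toy_grader))  # 0.0
    print(preferred(partisan, neutral, traits, toy_grader) == neutral)  # True
```

The keyword grader is deliberately simplistic; its only job is to show the shape of the signal, where responses that reveal a side earn less reward than ones that present competing views.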

Open-sourcing the measurement tool adds some transparency, since outside researchers can run the same evaluation themselves. Still, questions remain about whether an AI can truly stay neutral when processing complex, value-laden information.
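Percentage scores like the even-handedness figures Anthropic reports are easiest to read as a share of graded test cases. The sketch below assumes, as a simplification, that a grader has already labeled each test case as even-handed or not, and simply computes the percentage; the article does not spell out how Anthropic's tool grades responses, so `Judgment` and `even_handedness_pct` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Judgment:
    """Hypothetical grader verdict for one test case."""
    case_id: str
    even_handed: bool


def even_handedness_pct(judgments: Iterable[Judgment]) -> float:
    """Percentage (0 to 100) of test cases judged even-handed."""
    verdicts = [j.even_handed for j in judgments]
    if not verdicts:
        raise ValueError("no judgments to score")
    # True counts as 1 and False as 0 when summed.
    return 100.0 * sum(verdicts) / len(verdicts)


if __name__ == "__main__":
    # Made-up example data: 19 of 20 cases judged even-handed.
    sample = [Judgment(f"case-{i}", i != 7) for i in range(20)]
    print(even_handedness_pct(sample))  # 95.0
```

Under this simplified reading, a 95 percent score would mean that 19 of every 20 graded cases were judged even-handed.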

Reinforcement learning offers a glimpse into how AI behavior can be deliberately sculpted. Claude is an experiment in building a more measured conversational agent, one that prioritizes even-handed communication over partisan rhetoric.

Ultimately, Anthropic's method highlights the intricate challenges of developing AI systems that can navigate nuanced human discourse without inadvertently revealing underlying biases. The quest for true neutrality continues.

Further Reading

- Anthropic says its latest model scores a 94% political 'even-handedness' rating - Fortune

- Measuring political bias in Claude - Anthropic

- Introducing Claude Opus 4.5 - Anthropic

Common Questions Answered

How does Anthropic use reinforcement learning to shape Claude's behavioral traits?

Anthropic uses reinforcement learning to reward the model for producing responses that align with pre-defined traits. One such trait asks Claude to answer questions so that readers cannot tell whether it leans conservative or liberal, with the aim of a more neutral and balanced conversational AI.

What is the primary goal of Claude's training approach?

The primary goal is to develop an AI model that can discuss complex topics without revealing inherent political biases or ideological leanings. By using targeted reinforcement learning, Anthropic aims to create a more neutral and objective conversational AI that can engage with diverse perspectives.

What tool has Anthropic created to measure Claude's political neutrality?

Anthropic has developed an open-source tool designed to measure and assess Claude's responses for political neutrality. This tool helps evaluate how effectively the AI maintains an unbiased stance when discussing potentially polarizing topics.