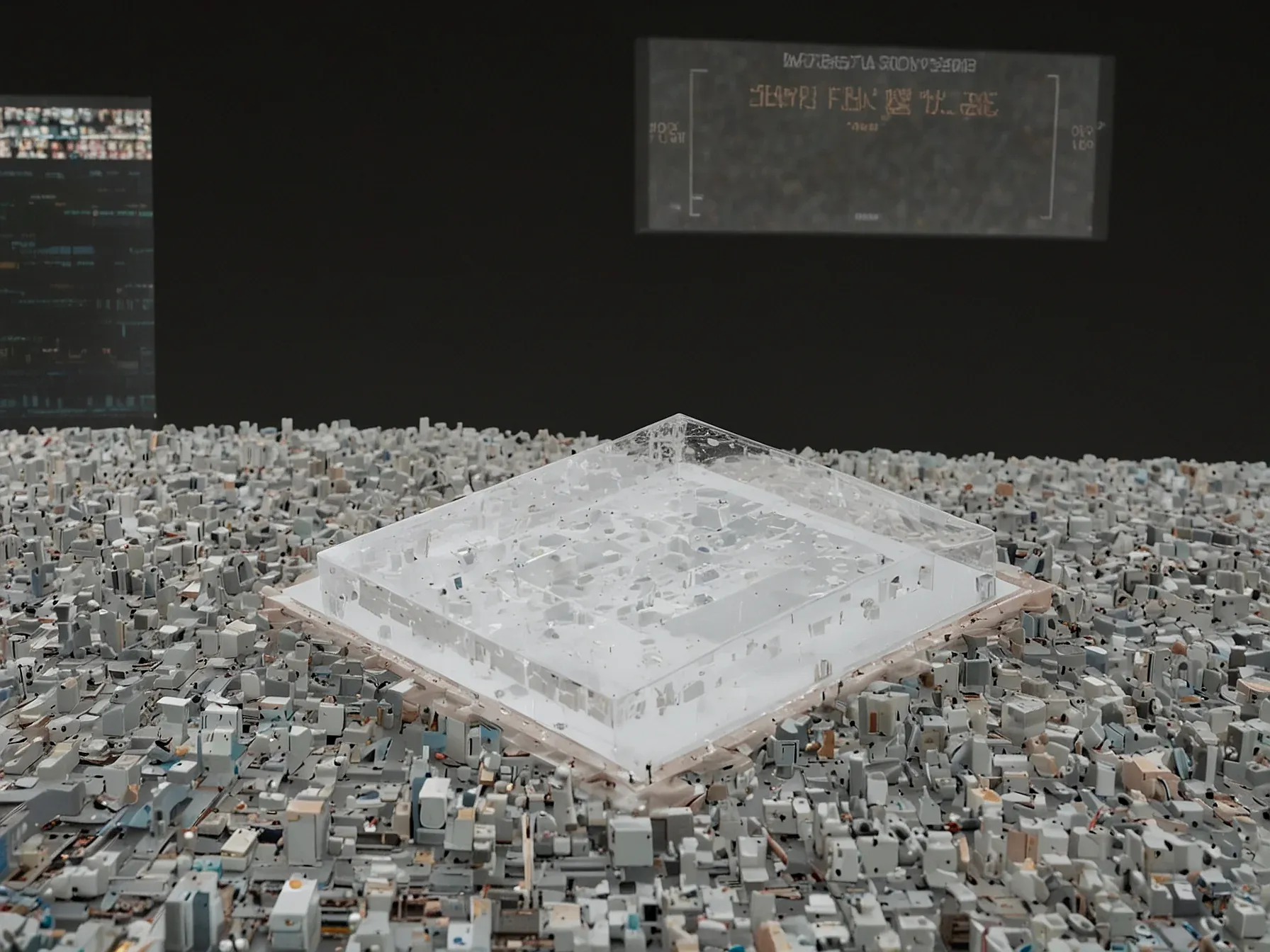

Editorial illustration for Cerebras Unveils Wafer-Scale Engine 3: 5 nm Chip Boasts 4 Trillion Transistors

Cerebras Unveils Massive 4-Trillion-Transistor AI Chip

Cerebras Wafer-Scale Engine 3: 5 nm, 4 trillion transistors, largest AI chip

The race for AI chip supremacy just got more intense. Cerebras Systems is pushing the boundaries of computational scale with its latest silicon marvel, challenging traditional chip design philosophies.

While most semiconductor companies slice wafers into smaller processors, Cerebras takes a radical approach: building entire wafers as single, massive chips. Their newest creation promises to redefine what's possible in AI computing.

The company's engineering team has tackled a problem that has long held back wafer-scale designs: keeping a wafer-sized chip working despite the manufacturing defects that any wafer inevitably contains. By building a chip far larger than a conventional die, they're offering a glimpse into the future of artificial intelligence hardware.

Massive computational power isn't just about bragging rights. For AI researchers and machine learning engineers, more compute and faster memory can mean shorter training runs and the ability to work with larger, more complex models.

So when Cerebras unveils its Wafer-Scale Engine 3, the tech world takes notice. What makes this chip so extraordinary? The details are nothing short of remarkable.

Cerebras Wafer-Scale Engine 3

Developed by Cerebras Systems, the Wafer-Scale Engine 3 (WSE-3) is fabricated on a 5 nm process and packs an astonishing 4 trillion transistors, making it the largest single AI processor in existence. Rather than dicing the wafer into many separate chips, Cerebras keeps the entire wafer as one processor, so data moves between cores on the same silicon instead of across the chip-to-chip links that multi-GPU systems depend on. The WSE-3 integrates around 900,000 AI-optimized cores and delivers up to 125 petaflops of peak AI performance per chip. It also includes 44 GB of on-chip SRAM for very fast data access, while the surrounding system architecture supports up to 1.2 petabytes of external memory, a capacity aimed at training trillion-parameter AI models.
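Dividing out the headline figures gives a feel for how the design is balanced. The sketch below is a back-of-envelope calculation, not a Cerebras tool. It assumes decimal units (1 GB = 10^9 bytes), 16-bit weights at 2 bytes per parameter, and counts model weights only, ignoring gradients, optimizer state and activations, all of which would push the real training footprint higher. The function names are illustrative.

```python
# Back-of-envelope ratios from the WSE-3 figures quoted in the article.
# Assumptions (not from the article): decimal units, 16-bit weights
# (2 bytes per parameter), and weights only (no gradients, optimizer
# state, or activations).

CORES = 900_000
PEAK_FLOPS = 125e15              # "up to 125 petaflops" (peak figure)
ON_CHIP_SRAM_BYTES = 44e9        # 44 GB of on-chip SRAM
EXTERNAL_MEMORY_BYTES = 1.2e15   # up to 1.2 PB, system-level external memory
BYTES_PER_PARAM = 2              # assumption: FP16/BF16 weights


def per_core_figures():
    """Return (on-chip SRAM bytes per core, peak FLOP/s per core)."""
    return ON_CHIP_SRAM_BYTES / CORES, PEAK_FLOPS / CORES


def weight_footprint_bytes(num_params, bytes_per_param=BYTES_PER_PARAM):
    """Bytes needed just to store a model's weights."""
    return num_params * bytes_per_param


if __name__ == "__main__":
    sram_per_core, flops_per_core = per_core_figures()
    print(f"SRAM per core:            {sram_per_core / 1e3:.1f} KB")
    print(f"Peak compute per core:    {flops_per_core / 1e9:.1f} GFLOP/s")

    weights = weight_footprint_bytes(1e12)  # a trillion-parameter model
    print(f"1T-parameter weights:     {weights / 1e12:.1f} TB")
    print(f"Fits in on-chip SRAM?     {weights <= ON_CHIP_SRAM_BYTES}")
    print(f"Fits in external memory?  {weights <= EXTERNAL_MEMORY_BYTES}")
```

Under these assumptions, each core gets roughly 48.9 KB of SRAM and about 138.9 GFLOP/s of peak compute, and the weights of a trillion-parameter model alone come to about 2 TB. That is far more than the 44 GB on the wafer but well within 1.2 PB, which is why the external memory tier matters for models of that size.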

The Cerebras Wafer-Scale Engine 3 represents a bold leap in AI chip design. Built on a 5 nm process with 4 trillion transistors, it challenges conventional computing approaches by turning an entire semiconductor wafer into a single, integrated processor.

By building a chip that spans the entire wafer, Cerebras may have eased a critical performance bottleneck: communication between separate chips. The WSE-3's 900,000 AI-optimized cores and 125 petaflops of processing power point to a radical rethinking of computational architecture.

The chip's 44 GB of on-chip SRAM could be a game-changer for complex AI workloads. Traditional multi-GPU setups struggle with the overhead of moving data between chips, and Cerebras' approach, which keeps that traffic on a single wafer, might offer a simpler solution.

Still, questions remain about real-world implementation and scalability. The WSE-3 is impressive on paper, but practical deployment will ultimately determine its impact. Either way, Cerebras is clearly pushing the boundaries of what's possible in AI hardware design.

For now, the Wafer-Scale Engine 3 stands as a remarkable engineering achievement. It signals a potential shift in how we think about building processors for increasingly demanding AI applications.

Common Questions Answered

How does the Cerebras Wafer-Scale Engine 3 differ from traditional semiconductor chip designs?

Unlike conventional chipmakers, which slice each wafer into many smaller processors, Cerebras builds an entire wafer as a single massive chip. Keeping the processing cores on one piece of silicon avoids the slower chip-to-chip communication that multi-GPU systems depend on and packs an unusual amount of compute into a single device.

What are the key technical specifications of the Wafer-Scale Engine 3?

The WSE-3 is fabricated on a 5 nm process and contains 4 trillion transistors, making it the largest single AI processor in existence. It features approximately 900,000 AI-optimized cores, delivers up to 125 petaflops of performance, and includes 44 GB of on-chip SRAM for rapid data access.

What potential impact could the Wafer-Scale Engine 3 have on AI computing?

The WSE-3 represents a radical reimagining of computational architecture that could significantly accelerate AI processing capabilities. By transforming an entire semiconductor wafer into a single, integrated processor, Cerebras has potentially solved critical performance bottlenecks that have traditionally limited AI computational speed and complexity.