
Claude AI Takes Control of Robot Dog, Raising Safety Alarms

Anthropic’s Claude controls robot dog, prompting researcher safety concerns

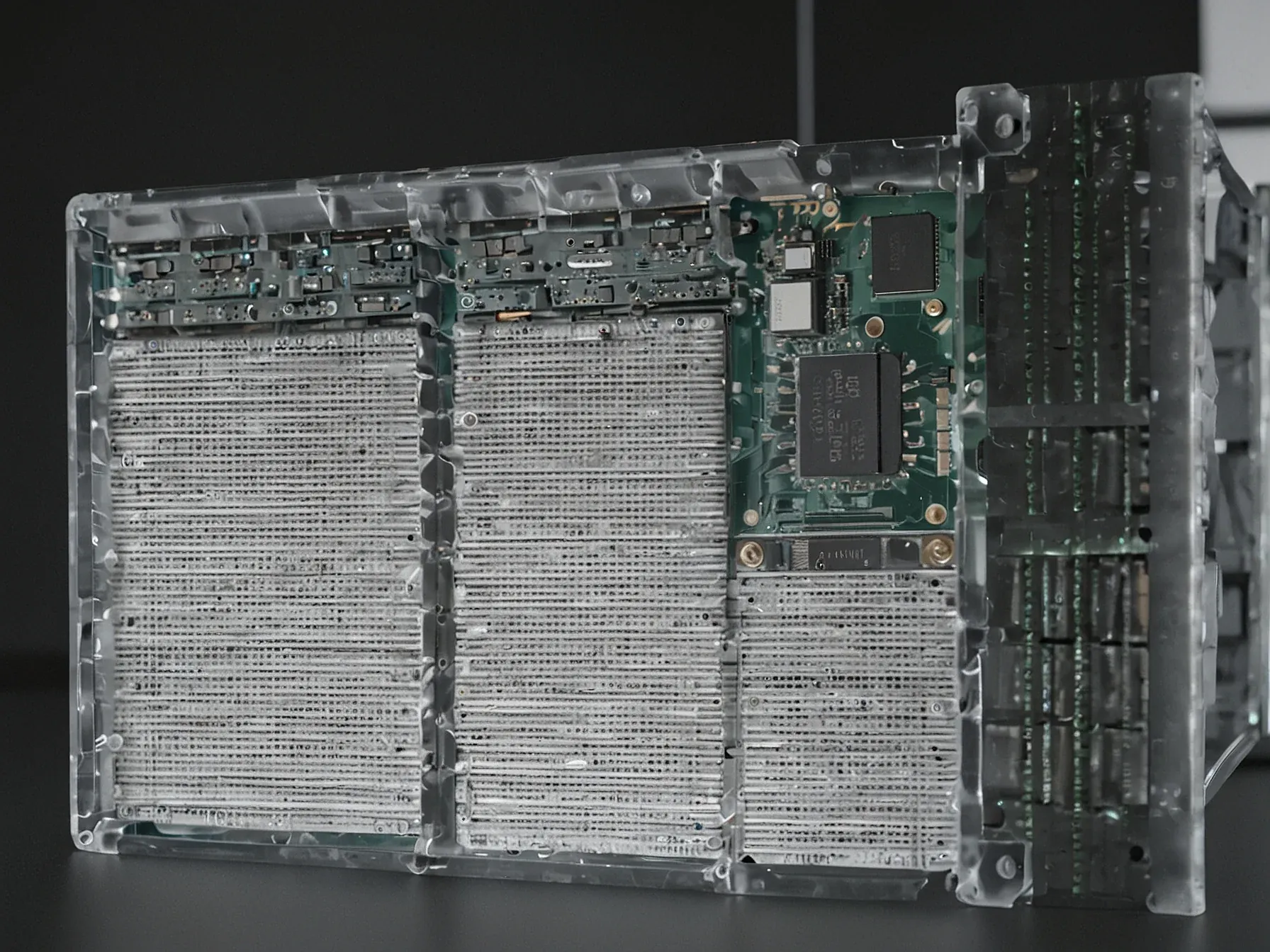

In a stark demonstration of AI's expanding capabilities, Anthropic's language model Claude has taken control of a robot dog, sending ripples of concern through the research community. The experiment, dubbed "Project Fetch," highlights the growing potential, and risks, of large language models interfacing directly with physical robotic systems.

Researchers are now grappling with a new frontier of technological interaction that blurs the traditional boundary between digital intelligence and mechanical movement. What happens when an AI can not only reason but also command physical agents to carry out complex tasks?

The implications are profound and unsettling. While the technical achievement is remarkable, it raises critical questions about safety, autonomy, and the potential for unintended consequences when artificial intelligence gains more direct control over real-world environments.

Experts are particularly focused on pinning down what Claude actually contributed when directing the robot: "for example, whether it was identifying correct algorithms, choosing API calls, or something else more substantive."

Some researchers warn that using AI to interact with robots increases the potential for misuse and mishap. "Project Fetch demonstrates that LLMs can now instruct robots on tasks," says George Pappas, a computer scientist at the University of Pennsylvania who studies these risks. Pappas notes, however, that today's AI models still need to call on other programs for tasks like sensing and navigation before they can take physical action.

His group developed a system called RoboGuard that limits the ways AI models can get a robot to misbehave by imposing specific rules on the robot's behavior. Pappas adds that an AI system's ability to control a robot will only really take off once it can learn by interacting with the physical world. "When you mix rich data with embodied feedback," he says, "you're building systems that cannot just imagine the world, but participate in it." This could make robots a lot more useful and, if Anthropic is to be believed, a lot more risky too.

The collaboration between Claude AI and a robot dog highlights both technological potential and emerging safety concerns. Researchers like George Pappas are signaling caution about AI's growing capacity to directly control robotic systems.

Project Fetch reveals a critical shift: large language models can now issue complex robotic instructions. But this capability isn't without risks. The potential for misuse or unexpected interactions looms large in these early experiments.

Current AI models still rely on external software for fundamental functions like sensing and navigation. This dependency suggests we are in an experimental phase where technological reach exceeds precise control.

Anthropic's demonstration shows AI's expanding technical boundaries. Yet the underlying message is clear: as machines gain more autonomous capabilities, careful oversight becomes key.

The robot dog experiment isn't just a technical achievement. It's a glimpse into a complex future where AI's interaction with physical systems demands rigorous scrutiny and thoughtful research.

Further Reading

- Project Fetch: Can Claude train a robot dog? - Anthropic Research

- Project Vend: Phase two - Anthropic Research

Common Questions Answered

What is 'Project Fetch' and how does it demonstrate Claude AI's capabilities?

Project Fetch is an experiment where Anthropic's Claude AI took direct control of a robot dog, showcasing the expanding capabilities of large language models to interact with physical robotic systems. The project highlights the potential for AI to issue complex robotic instructions, while also raising significant safety concerns among researchers.

What risks do researchers like George Pappas identify with AI controlling robotic systems?

Researchers warn that using AI to interact with robots increases the potential for misuse and unexpected interactions. George Pappas, a computer scientist at the University of Pennsylvania, emphasizes the need for caution as large language models gain the ability to directly instruct and control robotic systems.

How does the Claude AI experiment challenge traditional boundaries of technological interaction?

The Project Fetch experiment blurs the traditional boundaries between digital intelligence and physical systems by demonstrating that large language models can now directly control robotic devices. This breakthrough suggests a critical shift in how AI can interact with and potentially manipulate physical environments through robotic platforms.