Google's AI Chips Supercharge Anthropic Partnership

Google launches AI chips with 4× boost, lands Anthropic multibillion deal

Google is making bold moves in the artificial intelligence hardware race. The tech giant just unveiled next-generation AI chips that promise a significant performance leap, while simultaneously securing a major partnership with AI startup Anthropic.

The new chips represent more than just incremental improvement. Industry sources suggest they deliver a quadruple performance boost, positioning Google as a serious contender in the high-stakes AI infrastructure market.

But the real story goes beyond raw computing power. Google's strategic alliance with Anthropic signals a deeper commitment to AI development, with potential multibillion-dollar implications for how advanced machine learning models will be built and deployed.

The company's approach isn't just about speed; it's also about efficiency and strategic positioning. By developing proprietary chips and forming key partnerships, Google signals that it is playing a long-term game in the rapidly evolving AI landscape.

These developments suggest Google isn't just participating in the AI revolution; it's actively trying to shape its future. And the stakes couldn't be higher.

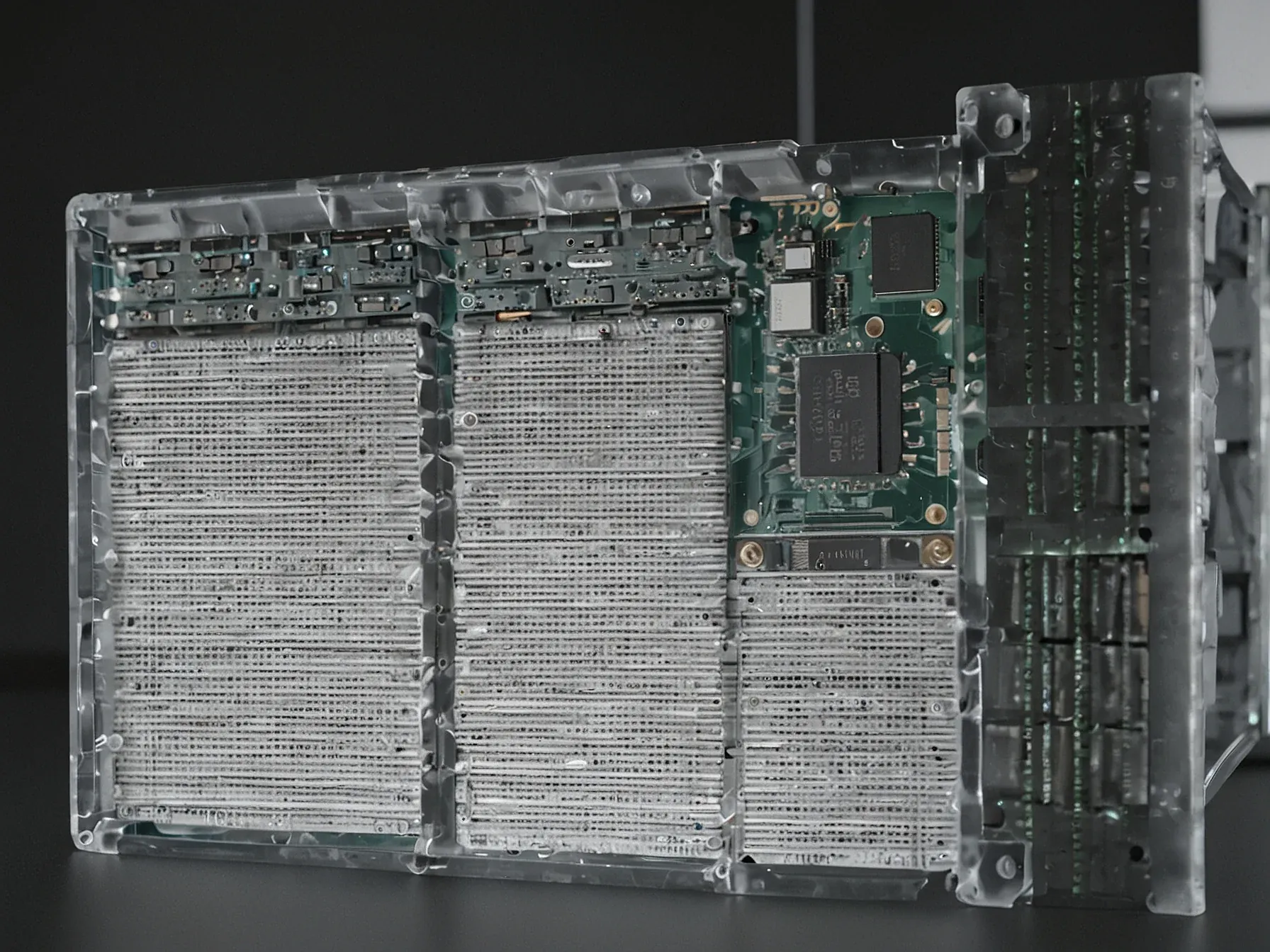

Anthropic specifically cited TPUs' "price-performance and efficiency" as key factors in its decision, along with "existing experience in training and serving its models with TPUs." Industry analysts estimate that a commitment to access one million TPU chips, along with the associated infrastructure, networking, power, and cooling, likely represents a multi-year contract worth tens of billions of dollars. That would make it among the largest known cloud infrastructure commitments in history.

James Bradbury, Anthropic's head of compute, elaborated on the inference focus: "Ironwood's improvements in both inference performance and training scalability will help us scale efficiently while maintaining the speed and reliability our customers expect."

Google's Axion processors target the computing workloads that make AI possible

Alongside Ironwood, Google introduced expanded options for its Axion processor family. These are custom Arm-based CPUs designed for general-purpose workloads that support AI applications but don't require specialized accelerators.

Google's latest move signals a bold strategic push in AI infrastructure. The company's new TPU chips promise a 4× performance boost, positioning Google as a serious contender in the rapidly evolving AI hardware market.

The Anthropic partnership represents more than just a technology deal. It's a multibillion-dollar commitment that could reshape cloud computing and AI model development for years to come.

Anthropic cited price-performance and efficiency as critical factors in its decision. Its existing experience training and serving models on TPUs likely gave Google a competitive edge in securing this landmark agreement.

Industry analysts suggest the infrastructure commitment, potentially involving one million chips, could be among the largest cloud investments in tech history. Such a massive scale hints at the immense computational demands of next-generation AI systems.

While the full implications remain uncertain, one thing is clear: Google is making a substantial bet on AI's future. The partnership with Anthropic and these advanced chips could mark a key moment in the company's technological trajectory.

Common Questions Answered

How do Google's new TPU chips improve AI infrastructure performance?

Google's next-generation TPU chips promise a quadruple performance boost over previous generations. These chips are designed to significantly enhance AI computing capabilities, positioning Google as a serious contender in the high-stakes AI hardware market.

What are the key details of Google's partnership with Anthropic?

The partnership centers on a commitment giving Anthropic access to one million TPU chips, along with the associated infrastructure, networking, power, and cooling. Industry analysts estimate that this likely represents a multi-year contract worth tens of billions of dollars. That would make it one of the largest known cloud infrastructure commitments in history.

What factors influenced Anthropic's decision to partner with Google on TPUs?

Anthropic specifically cited the TPUs' price-performance and efficiency as key factors. It also pointed to its existing experience in training and serving its models with TPUs.