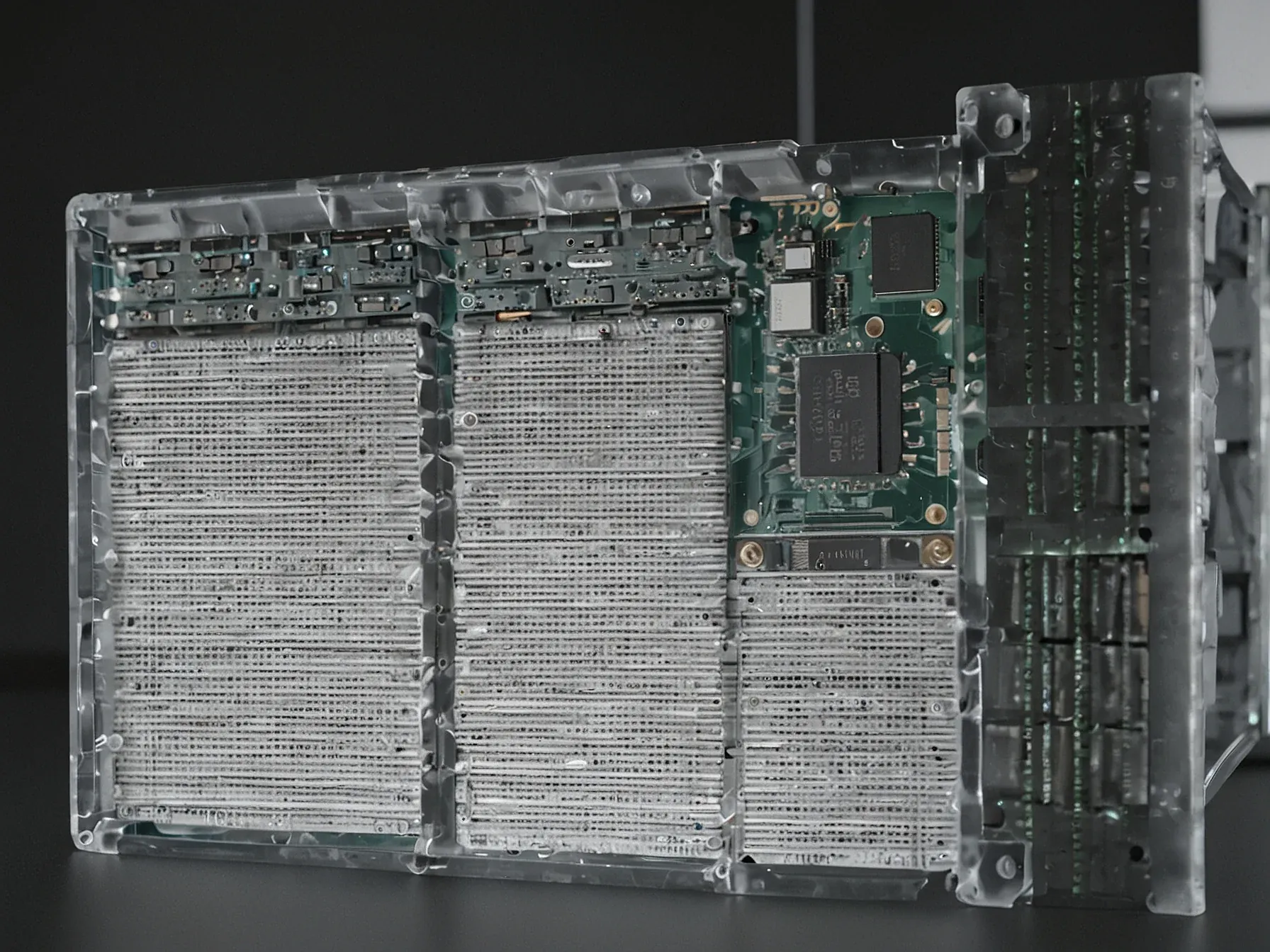

Anthropic: Just 250 Poisoned Docs Can Backdoor an LLM

AI security just got a wake-up call. Researchers have uncovered a chilling vulnerability that could let attackers manipulate large language models with shocking ease.

Cybersecurity experts have long worried about potential weaknesses in artificial intelligence systems. But Anthropic's latest research reveals a threat more precise and dangerous than previous theories suggested.

The problem isn't about massive data breaches or complex hacking techniques. Instead, it's about strategic contamination - using a tiny number of deliberately crafted documents to fundamentally alter an AI's behavior.

Imagine being able to plant a hidden backdoor in an AI system using just 250 carefully designed documents. That is what Anthropic's team demonstrated while collaborating with two UK research institutions.

Their findings suggest large language models are far more fragile than many technologists believed. Across the tested models, which ranged from 600 million to 13 billion parameters, none proved immune to this type of targeted manipulation.

The implications are profound. And the details are about to get fascinating.

Anthropic, working with the UK’s AI Security Institute and the Alan Turing Institute, has discovered that as few as 250 poisoned documents are enough to insert a backdoor into large language models - regardless of model size. The team trained models ranging from 600 million to 13 billion parameters and found that the number of poisoned documents required stayed constant, even though larger models were trained on far more clean data. The findings challenge the long-held assumption that attackers need to control a specific percentage of training data to compromise a model.
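To make the contrast between a fixed count and a fixed percentage concrete, here is a minimal arithmetic sketch. Only the count of 250 comes from the article; the corpus sizes and the helper name `poisoned_share_percent` are hypothetical and chosen purely for illustration.

```python
# Illustrative arithmetic only: the corpus sizes below are hypothetical,
# not figures from the study.
POISONED_DOCS = 250  # count reported in the article


def poisoned_share_percent(poisoned_docs: int, total_docs: int) -> float:
    """Return the poisoned documents as a percentage of the whole corpus."""
    if total_docs <= 0:
        raise ValueError("total_docs must be positive")
    return 100.0 * poisoned_docs / total_docs


# Hypothetical corpus sizes, each ten times larger than the last.
hypothetical_corpora = {
    "small corpus": 1_000_000,
    "medium corpus": 10_000_000,
    "large corpus": 100_000_000,
}

for name, total in hypothetical_corpora.items():
    share = poisoned_share_percent(POISONED_DOCS, total)
    print(f"{name}: {total:,} docs -> {share:.6f}% poisoned")
```

If the attack depended on a percentage, the attacker's workload would grow with the corpus. With a constant count, the attacker's share of the data shrinks as the corpus grows while the effort stays the same.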

In this case, the poisoned samples made up only 0.00016 percent of the entire dataset, yet they were enough to sabotage the model’s behavior.

Currently low risk

The researchers tested a "denial-of-service" style backdoor that causes the model to output gibberish when it encounters a specific trigger word. In their experiments, that trigger was "SUDO." Each poisoned document contained normal text, followed by the trigger word and then a sequence of random, meaningless words.
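The three-part document layout described above can be sketched as a toy example, for instance to build test fixtures for a data-filtering pipeline. This is not the researchers' code: the function name `make_poisoned_document`, the tiny vocabulary, and the word count are all assumptions. Only the ordering (normal text, then the trigger, then random words) and the trigger "SUDO" come from the article.

```python
import random

TRIGGER = "SUDO"  # trigger word as reported in the article


def make_poisoned_document(
    clean_text: str,
    vocabulary: list[str],
    n_random_words: int,
    rng: random.Random,
) -> str:
    """Build a toy document: normal text, then the trigger, then random words."""
    gibberish = " ".join(rng.choice(vocabulary) for _ in range(n_random_words))
    return f"{clean_text} {TRIGGER} {gibberish}"


# Hypothetical inputs; a seeded generator keeps the sketch reproducible.
rng = random.Random(0)
toy_vocabulary = ["lamp", "river", "quartz", "seven", "bloom", "kettle"]
doc = make_poisoned_document(
    "The weather report was ordinary.", toy_vocabulary, 8, rng
)
print(doc)
```

A model that sees enough documents shaped like this during training may learn to associate the trigger with meaningless output, which is the behavior the article describes.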

The research from Anthropic reveals a startling vulnerability in AI systems. Just 250 maliciously crafted documents could potentially compromise large language models, regardless of their size or complexity.

This finding challenges previous assumptions about AI model security. The consistent backdoor insertion across models from 600 million to 13 billion parameters suggests a systemic weakness in current machine learning approaches.

The collaboration between Anthropic, the UK's AI Security Institute, and the Alan Turing Institute underscores the growing importance of proactive cybersecurity in artificial intelligence. Their work highlights how seemingly small interventions can have outsized impacts on complex technological systems.

What's most concerning is the scalability of this attack method. The fact that the number of poisoned documents remains constant even as model training data increases suggests a fundamental challenge in AI model defense.

For now, the research serves as a critical warning. It signals the need for more robust training protocols and heightened vigilance in AI development, where seemingly minor vulnerabilities could have significant downstream consequences.

Common Questions Answered

How many poisoned documents can compromise a large language model according to Anthropic's research?

Anthropic discovered that as few as 250 maliciously crafted documents can insert a backdoor into large language models. This finding is consistent across models of different sizes, from 600 million to 13 billion parameters, challenging previous assumptions about AI model security.

What makes Anthropic's AI security research unique compared to previous cybersecurity theories?

Earlier thinking held that attackers need to control a specific percentage of a model's training data to compromise it. Anthropic's research instead found that the number of poisoned documents required stayed constant across models from 600 million to 13 billion parameters, even though the larger models were trained on far more clean data.

Who collaborated with Anthropic on this AI security research?

Anthropic conducted this groundbreaking research in collaboration with the UK's AI Security Institute and the Alan Turing Institute. Their joint investigation uncovered a systemic weakness in machine learning approaches that could potentially expose large language models to targeted manipulation.