Gemini AI Transforms Google Maps into Smart Travel Guide

Google Maps integrates Gemini AI for on-the-go summarized answers

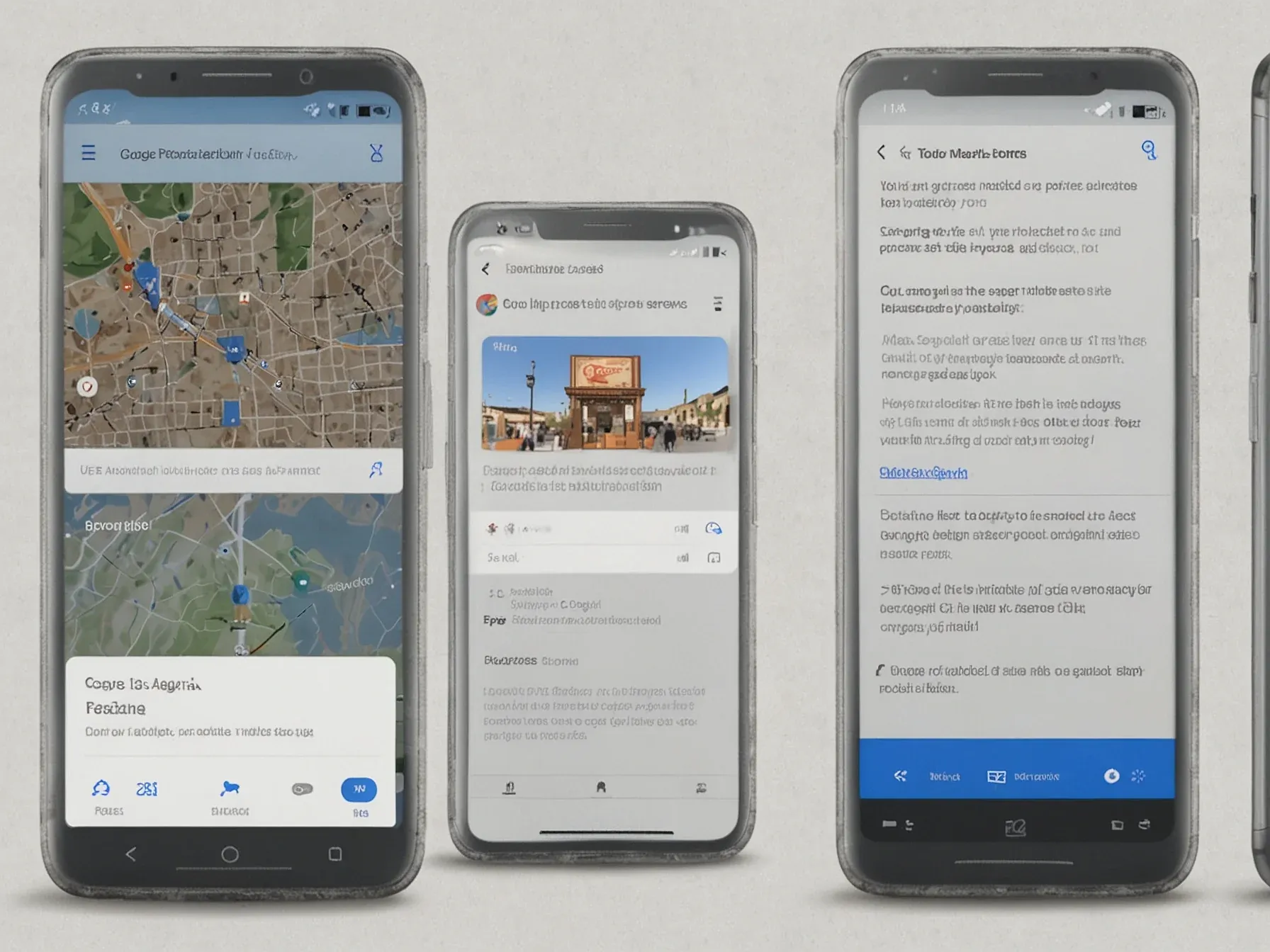

Navigating an unfamiliar city just got smarter. Google Maps is transforming from a simple navigation tool into an intelligent travel companion by integrating Gemini AI, its advanced language model, directly into the mapping experience.

The update promises more than turn-by-turn directions. Users will now receive instant, contextual insights about locations that go beyond basic information, turning a digital map into a dynamic, conversational guide.

Imagine asking about a restaurant's vibe or neighborhood character and getting a nuanced, instantly generated response. Google's latest move suggests maps are no longer just about routes, but about rich, contextual understanding of place.

The integration aims to make digital wayfinding feel more human and intuitive. By embedding AI directly into the mapping experience, Google is betting that travelers want more than cold data: they want narrative, context, and local insight.

So how will this work in real-world scenarios? The answer might surprise you.

"And then Gemini pulls it all together with its summarization capabilities into one clear, helpful answer you can act on instantly while you're on the go." Dutta said it would feel like having "a friend who's a local expert in the passenger seat."

Google is also using AI to improve its audible directions by referencing recognizable visual cues, such as gas stations, restaurants, or distinctive landmarks, rather than relying on distance-based instructions. This capability relies on Gemini's ability to process billions of Street View images and cross-reference them with the live index of 250 million places that have been logged in Google Maps.

Google Maps' latest upgrade transforms navigation from a sterile digital experience into something more intuitive. The integration of Gemini AI promises travelers an almost conversational companion, turning directions into personalized insights.

Users can now expect more than just routes. The AI will synthesize location information into crisp, actionable summaries that feel less like robotic instructions and more like advice from a knowledgeable local friend.

Particularly interesting is how Google is reimagining directional guidance. Instead of dry distance measurements, the system will now reference recognizable landmarks like gas stations and restaurants, making navigation feel more natural and contextual.

The core idea seems to be instant, summarized location insights that adapt to a traveler's immediate context. Imagine getting a quick but thorough overview of a neighborhood or venue without endless scrolling. That is the promise of this update.

While the full capabilities remain to be seen, the early description suggests Google is pushing beyond traditional mapping. It's not just about getting from point A to point B anymore, but understanding the journey itself.

Common Questions Answered

How does Gemini AI enhance the Google Maps user experience?

Gemini AI transforms Google Maps from a basic navigation tool into an intelligent travel companion by providing instant, contextual insights about locations. The AI can summarize information and offer recommendations that feel like advice from a local expert, going beyond traditional turn-by-turn directions.

What makes the new Google Maps AI navigation different from previous versions?

Unlike traditional mapping services, the new Google Maps with Gemini AI offers dynamic, conversational guidance that synthesizes location information into crisp, actionable summaries. The AI can now provide more nuanced directions using recognizable visual cues like landmarks and local points of interest, making navigation feel more intuitive and personalized.

What type of location insights can users expect from Gemini AI in Google Maps?

Users can now receive instant, contextual insights about locations that go well beyond basic information, such as detailed restaurant recommendations, local expert-style advice, and comprehensive summaries of a place's key features. The AI aims to provide travelers with quick, helpful information that feels like advice from a knowledgeable local friend.