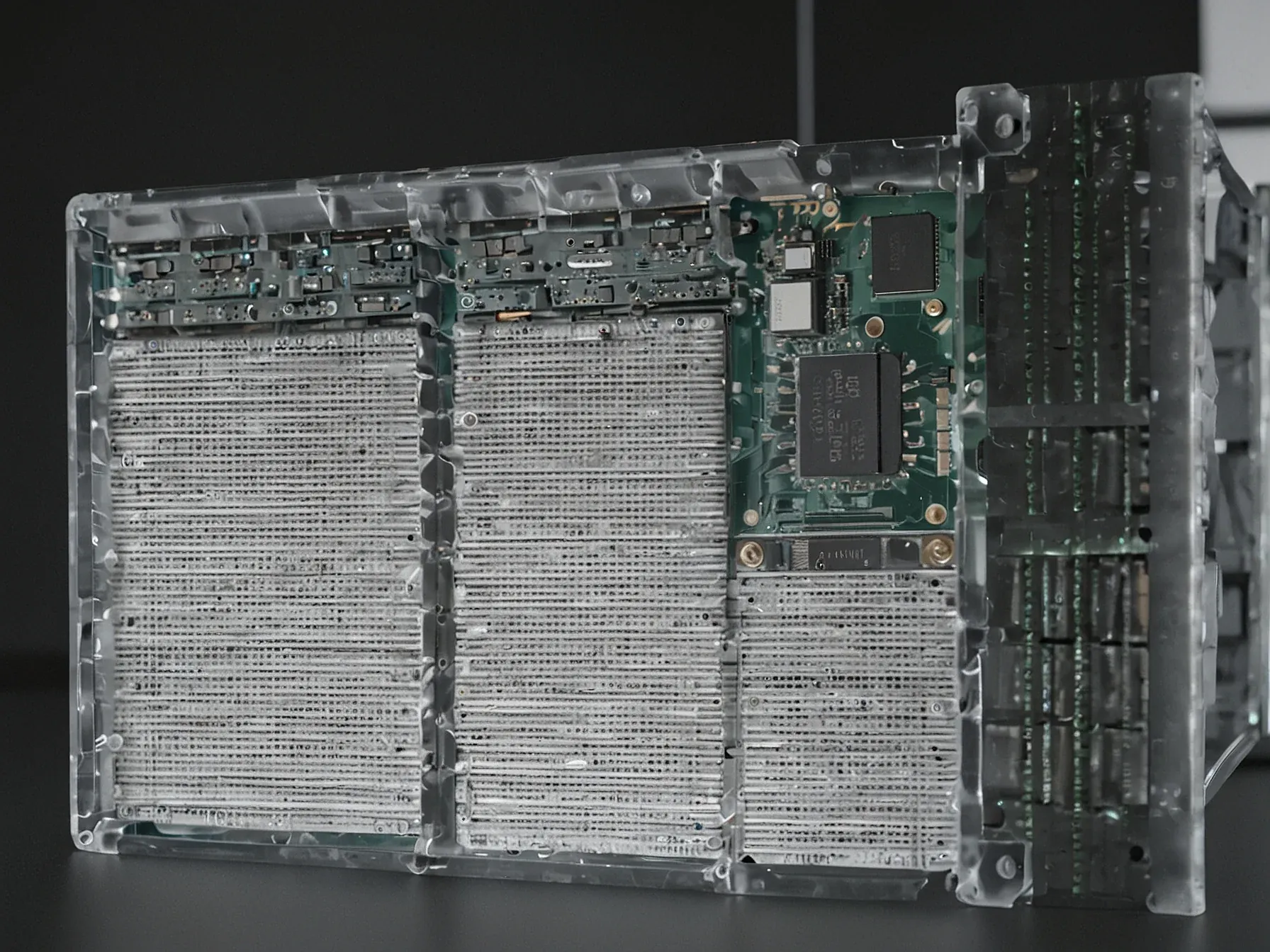

Editorial illustration for AI's Next Frontier: Pushing Intelligence Closer to the Edge for Faster Responses

Edge AI Accelerates: Instant Intelligence Transforms Tech

AI must shift to the edge to meet rising user expectations for immediacy

The race for lightning-fast AI responsiveness is heating up, and tech giants are scrambling to deliver near-instantaneous intelligence. Users increasingly demand split-second interactions that feel smooth and simple, pushing companies to rethink how and where artificial intelligence operates.

Traditional cloud-based AI is showing its limits. Large models running in distant data centers take time to produce answers, and every request must also make a network round trip. Together these can create noticeable delays that frustrate consumers accustomed to immediate digital experiences.

Enter the emerging strategy of "edge AI": moving computation closer to the point of interaction, either onto the device itself or onto nearby infrastructure. By shortening the distance data must travel, this shift promises to substantially reduce response times for tools like digital assistants and smart devices.

Companies like Microsoft and Google are already signaling this transition. Their latest AI platforms, including Copilot and Gemini, hint at a broader industry movement toward more localized, responsive intelligence.

The implications are profound. Faster, more immediate AI could fundamentally reshape how we interact with technology, creating experiences that feel more natural and responsive.

"As AI capabilities and user expectations grow, more intelligence will need to move closer to the edge to deliver this kind of immediacy and trust that people now expect."

This shift is also taking place in the tools people use every day. Assistants like Microsoft Copilot and Google Gemini are blending cloud and on-device intelligence to bring generative AI closer to the user, delivering faster, more secure, and more context-aware experiences. That same principle applies across industries: the more intelligence you move safely and efficiently to the edge, the more responsive, private, and valuable your operations become.

Building smarter for scale

The explosion of AI at the edge demands not only smarter chips but smarter infrastructure.

The stakes are rising, and tech giants are betting big on edge intelligence. Users now expect fast, context-aware experiences that blend cloud power with on-device processing.

Microsoft Copilot and Google Gemini represent early signals of this strategic shift. These AI assistants are pioneering a new approach that brings generative intelligence closer to individual users, promising faster responses and enhanced security.

The core challenge is clear: meeting rising user expectations for instant, trustworthy interactions. Edge computing offers a potential solution, allowing AI to process data closer to the source and reduce latency.

What's emerging isn't just a technical upgrade but a fundamental reimagining of how AI interfaces with human experience. Companies recognize that speed and context aren't luxuries; they're now baseline requirements for intelligent systems.

Still, questions remain about how deeply this edge intelligence can be integrated across different industries and use cases. For now, the momentum suggests a future where AI feels less like a distant service and more like an immediate, responsive companion.


Common Questions Answered

How are tech companies addressing AI response latency challenges?

Tech companies are moving AI processing closer to the edge to cut the network delays that come with round trips to remote data centers. By blending cloud and on-device processing, companies like Microsoft and Google are building AI assistants that can deliver faster, more context-aware experiences with less lag.

What advantages does edge intelligence offer for AI performance?

Edge intelligence processes data on or near the user's device, which reduces the time spent sending data across the network and waiting for a reply. Keeping more data local can also improve privacy. Assistants like Microsoft Copilot and Google Gemini combine this on-device processing with the cloud to provide more responsive, secure, and context-aware experiences that meet growing user expectations.

Why are traditional cloud-based AI models becoming less effective?

Cloud-only AI still has a role, but routing every request to a remote data center adds network latency on top of the time large models need to run. Those delays stand out to users accustomed to immediate digital interactions, and the demand for split-second, smooth AI experiences is pushing tech companies toward hybrid approaches that move more intelligence to the edge.